Alerting in Google Cloud with Custom Log-Based Metrics and Cloud Monitoring

In the ever-evolving landscape of cloud security, the battleground has shifted to the trenches of real-time alert analysis. For security teams, wading through a deluge of notifications is no longer enough. They need a streamlined approach to identify genuine threats amidst the digital noise. This is where Google Cloud alerting comes in: it sifts through mountains of data, surfaces critical anomalies, and lets security personnel act swiftly and decisively. Buckle up as we explore cloud alerting and how it can strengthen your security posture and safeguard your cloud assets.

Google Cloud Alerting Methods: A Quick Tour

Navigating the vast sea of data in Google Cloud requires a keen eye and a swift hand. That’s where alerting comes in, acting as your vigilant lookout, notifying you when metrics breach thresholds, logs reveal anomalies, or uptime checks scream of trouble. But with so many options at your fingertips, which method should you choose? Let’s explore the main contenders:

1. Metric-Based Alerting: This heavyweight champion monitors your system’s vitals, like CPU usage or storage capacity. Define thresholds, and boom! You’re notified if things get too hot (or cold).

2. Log-Based Alerting: This detective combs through your logs, searching for suspicious patterns or specific keywords. Think security threats or application errors, all caught red-handed.

3. Uptime Checks: This watchdog keeps an eye on your external services, ensuring they’re alive and kicking. No more downtime surprises, just proactive alerts before users start yelling.

4. Custom Alerting: Feeling creative? Craft your own alerts using the Monitoring API or Cloud Functions. Integrate with external tools, build complex logic, and tailor your alerting to your specific needs.

Bonus Round: Don’t forget the notification channels! Email, SMS, Slack, PagerDuty — choose your poison and ensure alerts reach you loud and clear, no matter where you roam.

Remember, the best approach is often a mix-and-match. Use metrics for performance, logs for security, and uptime checks for peace of mind. Then, sprinkle in some anomaly detection and custom alerts for good measure. With the right combination, your Google Cloud environment will be a fortress of awareness, always one step ahead of trouble.

Let's get hands-on with a specific requirement: we want an alert for a Cloud Armor policy that blocks specific IPs. If the same IP appears x times in y minutes, an alert should be sent via email or Slack. Let's assume a load balancer with a Cloud Armor policy attached to its backend service is already in place.

You will find logs like the one below in Cloud Logging for the Cloud Armor policy that is blocking access to the endpoint. If you are not seeing the logs, make sure that logging is enabled for the backend service associated with the load balancer and that detailed audit logs are enabled for the Cloud Armor policy.

The remoteIp field holds the IP address of the source. We want to detect when that IP has been blocked x times (say, 5 times) in the last y minutes (say, 5 minutes).

    {
      "insertId": "tyvh8vfi0k1di",
      "jsonPayload": {
        "remoteIp": "ab.cde.fgh.hi",
        "backendTargetProjectNumber": "projects/abc",
        "@type": "type.googleapis.com/google.cloud.loadbalancing.type.LoadBalancerLogEntry",
        "statusDetails": "denied_by_security_policy",
        "cacheDecision": [
          "RESPONSE_HAS_CONTENT_TYPE",
          "REQUEST_HAS_IF_NONE_MATCH",
          "CACHE_MODE_USE_ORIGIN_HEADERS"
        ],
        "enforcedSecurityPolicy": {
          "priority": 1000,
          "outcome": "DENY",
          "configuredAction": "DENY",
          "name": "block-all-http-requests"
        }
      },
      "httpRequest": {
        "requestMethod": "GET",
        "requestUrl": "http://xxx.xxx.xxx.xxx/url",
        "requestSize": "488",
        "status": 403,
        "responseSize": "258",
        "userAgent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
        "remoteIp": "ab.cde.fgh.hi",
        "latency": "0.102957s"
      },
      "resource": {
        "type": "http_load_balancer",
        "labels": {
          "target_proxy_name": "http-lb-proxy",
          "project_id": "project_id",
          "zone": "global",
          "url_map_name": "http-load-balancer",
          "forwarding_rule_name": "http-content-rule",
          "backend_service_name": "backend-service"
        }
      },
      "timestamp": "2023-12-25T07:17:32.061039Z",
      "severity": "WARNING",
      "logName": "projects/project_id/logs/requests",
      "trace": "projects/project_id/traces/15dc480f7c7879c404b6b33843099866",
      "receiveTimestamp": "2023-12-25T07:17:33.457621996Z",
      "spanId": "25c549956d7c28e2"
    }

Now that you can see the deny logs flowing into Cloud Logging, the next step is to build a query that identifies each IP address and its count within a specific time frame. For this we will use a log-based metric and alerting.
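Before building anything in the console, it can help to see the counting logic on its own. The following is a minimal offline sketch in Python, not part of the Google Cloud setup: it takes log entries shaped like the sample above and returns the IPs denied more than a threshold number of times within a window. The function names and the example IP addresses are hypothetical, and the defaults (a 5-minute window and a threshold of 5) simply mirror the values used later in this walkthrough.

```python
from collections import Counter
from datetime import datetime, timedelta


def parse_ts(ts: str) -> datetime:
    """Parse a timestamp such as 2023-12-25T07:17:32.061039Z into an aware datetime."""
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def blocked_ips(entries, now, window=timedelta(minutes=5), threshold=5):
    """Return {ip: count} for IPs denied more than `threshold` times in the window.

    Only entries whose statusDetails is "denied_by_security_policy" and whose
    timestamp falls in (now - window, now] are counted.
    """
    counts = Counter()
    for entry in entries:
        payload = entry.get("jsonPayload", {})
        if payload.get("statusDetails") != "denied_by_security_policy":
            continue
        if now - window < parse_ts(entry["timestamp"]) <= now:
            counts[payload["remoteIp"]] += 1
    return {ip: n for ip, n in counts.items() if n > threshold}
```

Calling blocked_ips with a list of parsed log entries and the current time gives the same kind of answer the alert is meant to produce: which IPs crossed the threshold in the window.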

We first have to create a metric and attach the field that contains the IP address as a label on that metric.

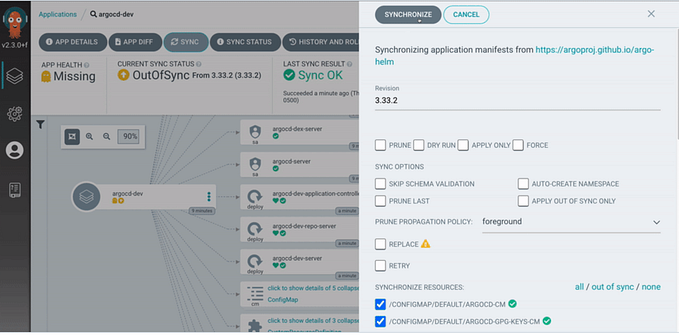

Go to Cloud Logging and filter for the Cloud Armor logs associated with the Cloud Armor policy and the load balancer. In the example below, you will see the two filters: the Cloud Armor policy name and the load balancer resource type.
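As a rough sketch, the two filters can be written as a Logs Explorer query string. The small Python helper below only assembles that string. The field paths are taken from the sample log entry above, the policy name is the one from that sample, and the helper name is hypothetical.

```python
def cloud_armor_deny_filter(policy_name: str) -> str:
    """Build a Logs Explorer filter for one Cloud Armor policy on a load balancer.

    Combines the two conditions described in the text: the load balancer
    resource type and the name of the enforced Cloud Armor policy.
    """
    conditions = [
        'resource.type="http_load_balancer"',
        f'jsonPayload.enforcedSecurityPolicy.name="{policy_name}"',
    ]
    return " AND ".join(conditions)


print(cloud_armor_deny_filter("block-all-http-requests"))
```

The printed string can be pasted into the Logs Explorer query box to check that it matches the same entries as the filters selected in the console.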

Click Create Metric and fill in the following details:

Set Metric Type to Counter. The Cloud Logging documentation explains the difference between Counter and Distribution metrics.

Provide the metric details (this walkthrough uses the name IP_Block_Alert) and set Units to 1, since we selected Counter as the metric type.

The Build filter section is auto-populated with the filter condition that we used.

Click Add Label and provide the label details (this walkthrough names the label IP_Alert):

Select the field that contains the IP address of the remote source, and supply a regex pattern if you need to extract part of the value. In our case the field holds only the IP address, so we use (.*) to capture the entire value.

Make sure you click the Done button and then click the Create metric button.
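For readers who prefer to see the same settings as data, here is a hedged sketch of what the metric definition might look like as a request body for the Cloud Logging API's LogMetric resource. It only builds and prints a dictionary; nothing is sent to Google Cloud. The choice of jsonPayload.remoteIp as the label source is an assumption (the sample entry also carries httpRequest.remoteIp), and the description strings and helper names are hypothetical.

```python
import json
import re

METRIC_NAME = "IP_Block_Alert"  # metric name used in this walkthrough
LABEL_NAME = "IP_Alert"         # label name used in this walkthrough


def log_metric_body(policy_name: str) -> dict:
    """Build a LogMetric-style body for a counter metric with an IP label."""
    return {
        "name": METRIC_NAME,
        "description": "Requests denied by a Cloud Armor policy, labeled by source IP",
        "filter": (
            'resource.type="http_load_balancer" AND '
            f'jsonPayload.enforcedSecurityPolicy.name="{policy_name}"'
        ),
        "metricDescriptor": {
            "metricKind": "DELTA",   # counter metrics are DELTA / INT64
            "valueType": "INT64",
            "unit": "1",
            "labels": [
                {"key": LABEL_NAME, "valueType": "STRING",
                 "description": "Source IP of the denied request"},
            ],
        },
        # Assumption: the label is extracted from jsonPayload.remoteIp.
        "labelExtractors": {
            LABEL_NAME: 'REGEXP_EXTRACT(jsonPayload.remoteIp, "(.*)")',
        },
    }


def extract_label(value: str) -> str:
    """Show what the (.*) pattern captures: the entire field value."""
    return re.search(r"(.*)", value).group(1)


print(json.dumps(log_metric_body("block-all-http-requests"), indent=2))
```

The extract_label helper is only there to illustrate why (.*) is enough here: the capture group returns the whole string, so the label value is the IP address itself.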

Metric creation and the associated data population take a few minutes, so wait a few minutes once this step is complete.

After a few minutes, click on Log-based Metrics.

Click the three dots at the end of the metric's row and open the metric in Metrics Explorer.

If you do not see any data, wait for the data to be mapped. It may take a few minutes.

When you see the data, click Aggregation by and select the metric label that we created above, i.e. "IP_Alert".

You will see the different IPs and their corresponding counts for the selected time frame. Now click MQL at the top right.

You will see that the MQL has been generated from the query that we built. You can read more about MQL in the Cloud Monitoring documentation.

We will modify the MQL to meet our requirement: evaluate every z minutes (say, 1 minute) over a window of y minutes (say, 5 minutes). Each pipe in the query takes the output of one stage and passes it to the next.

fetch l7_lb_rule => Fetches data for the load balancer resource

metric 'logging.googleapis.com/user/IP_Block_Alert' => Selects the log-based metric that we created

every 1m => The query is evaluated every 1 minute

group_by [metric.IP_Alert], sliding(5m), [value_IP_Block_Alert_aggregate: sum(value.IP_Block_Alert)] => For a sliding window of 5 minutes, groups by the IP_Alert label (the IP address) and sums the total occurrences

Finally, we need to add the threshold as a filter condition at the end, i.e. the number of occurrences above which an alert should be raised.
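Putting the stages together, the query might look like the string produced by the sketch below. The first four stages come straight from the explanation above. The final threshold stage is an assumption about how the comparison is written (val() > 5), and the helper name and parameters are hypothetical. The for_alerting flag switches the last stage from filter to condition, which is the form an alerting policy expects.

```python
def build_mql(metric: str = "IP_Block_Alert",
              label: str = "IP_Alert",
              window: str = "5m",
              every: str = "1m",
              threshold: int = 5,
              for_alerting: bool = False) -> str:
    """Assemble the MQL query described above as a single string."""
    # Assumption: the threshold stage is written with val(); the original
    # query text for this stage is not shown in the article.
    last_op = "condition" if for_alerting else "filter"
    stages = [
        "fetch l7_lb_rule",
        f"| metric 'logging.googleapis.com/user/{metric}'",
        f"| every {every}",
        f"| group_by [metric.{label}], sliding({window}), "
        f"[value_{metric}_aggregate: sum(value.{metric})]",
        f"| {last_op} val() > {threshold}",
    ]
    return "\n".join(stages)


print(build_mql())                   # version for Metrics Explorer
print(build_mql(for_alerting=True))  # version for an alerting policy
```

Keeping the window, period, and threshold as parameters makes it easy to try other values of x, y, and z without retyping the query.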

Now copy this MQL and go to Alerting.

Click Create Policy at the top.

Click MQL and paste the MQL that we copied earlier.

Click Run Query and validate the output. Here you will see all of the output, not only the values above the threshold. You will also need to change the last operation from filter to condition.

The alert trigger maps to the condition that we have in the query, i.e. > 5.

Create a Notification Channel for Email/SMS/Slack etc.

Select the channel that you created above.
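If you manage channels outside the console, an email channel can be described with a small request body for the Cloud Monitoring NotificationChannel resource. The sketch below only builds that dictionary and sends nothing. The display name and email address are placeholders, and the helper name is hypothetical. Slack channels need additional authorization details that are easier to set up in the console.

```python
import json


def email_channel_body(display_name: str, email_address: str) -> dict:
    """Build a NotificationChannel-style body for an email channel."""
    return {
        "type": "email",
        "displayName": display_name,
        "labels": {"email_address": email_address},
        "enabled": True,
    }


# Placeholder values for illustration only.
print(json.dumps(email_channel_body("SecOps email", "secops@example.com"), indent=2))
```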

Now you can configure the documentation section with the message you want to send in the alert. You will want to include the actual IP address in the email or Slack body of the alert. This can be done by referencing the value via ${metric.label.KEY}, where KEY is the label name (IP_Alert in this walkthrough).
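To get a feel for how the message will read, the substitution can be previewed locally. The sketch below is only an imitation of the ${metric.label.KEY} replacement for preview purposes, not how Cloud Monitoring implements it. The template text, the example IP, and the function name are hypothetical.

```python
import re


def render_documentation(template: str, labels: dict) -> str:
    """Preview ${metric.label.KEY} substitutions in an alert message."""
    def replace(match: re.Match) -> str:
        key = match.group(1)
        # Leave unknown variables untouched so typos are easy to spot.
        return labels.get(key, match.group(0))

    return re.sub(r"\$\{metric\.label\.([A-Za-z0-9_]+)\}", replace, template)


template = "Cloud Armor blocked IP ${metric.label.IP_Alert} repeatedly."
print(render_documentation(template, {"IP_Alert": "203.0.113.7"}))
```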

Review the changes and click on Create Policy
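For reference, the policy assembled in the console corresponds roughly to a Cloud Monitoring AlertPolicy with an MQL condition. The sketch below builds such a body as a dictionary and sends nothing. The display names, the zero-second duration, the single-violation trigger, and the channel resource name are illustrative assumptions rather than values from this walkthrough.

```python
import json


def alert_policy_body(query: str, channel_names: list) -> dict:
    """Build an AlertPolicy-style body with one MQL condition."""
    return {
        "displayName": "Cloud Armor repeated deny by IP",
        "combiner": "OR",
        "conditions": [
            {
                "displayName": "Same IP denied more than 5 times in 5 minutes",
                "conditionMonitoringQueryLanguage": {
                    "query": query,          # MQL ending in a condition stage
                    "duration": "0s",        # assumption: alert on first violation
                    "trigger": {"count": 1},
                },
            }
        ],
        "notificationChannels": list(channel_names),
        "documentation": {
            "content": "Blocked IP: ${metric.label.IP_Alert}",
            "mimeType": "text/markdown",
        },
    }


# Placeholder query and channel name for illustration only.
example = alert_policy_body(
    "fetch l7_lb_rule | ... | condition val() > 5",
    ["projects/PROJECT_ID/notificationChannels/CHANNEL_ID"],
)
print(json.dumps(example, indent=2))
```

Having the policy in this form can be handy if you later want to keep it in version control alongside the rest of your configuration.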

Finally, you will see the policy created and the alerts arriving with the desired output.

Hope you found this useful from a SecOps perspective. We will also look at another approach, showing how this can be achieved using Log Analytics.

Thanks and Happy GCPing!